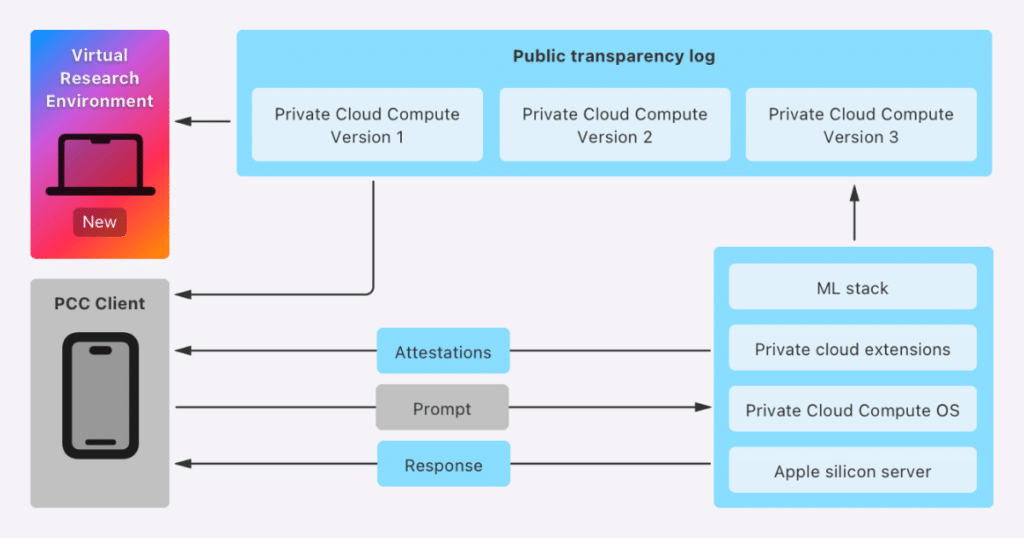

Apple has introduced Private Cloud Compute (PCC), a new cloud intelligence system designed to handle artificial intelligence processing while maintaining user privacy. Private Cloud Compute is built to keep AI requests processed in the cloud secure, addressing concerns about potential data exposure. To build further trust in the system, Apple has invited security and privacy researchers to verify its security claims independently, offering resources such as the Private Cloud Compute Virtual Research Environment (VRE).

The VRE, now publicly available, lets security researchers and anyone with technical curiosity inspect how Private Cloud Compute works and assess its security claims. It provides tools for inspecting PCC software releases, verifying the transparency log, booting a release in a virtualized environment, and carrying out deeper investigations. Researchers can also modify and debug PCC software to probe for potential vulnerabilities. The VRE requires a Mac with Apple silicon and at least 16GB of unified memory, and it is available in the macOS Sequoia 15.1 Developer Preview.
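For readers unfamiliar with transparency logs, one common check is an inclusion proof: a short cryptographic argument that a specific software release was recorded in an append-only log whose published root hash anyone can audit. Apple's actual PCC log format and VRE tooling are not reproduced here. The minimal Python sketch below is an assumption-based illustration that borrows the Merkle-tree hashing and inclusion-proof algorithm from RFC 9162 (Certificate Transparency 2.0); the function names and the byte-string "release" entries are hypothetical.

```python
import hashlib
from typing import List, Sequence

# Sketch only: an RFC 9162-style Merkle log, NOT Apple's PCC transparency log format.


def leaf_hash(data: bytes) -> bytes:
    """Leaf hash: SHA-256(0x00 || data)."""
    return hashlib.sha256(b"\x00" + data).digest()


def node_hash(left: bytes, right: bytes) -> bytes:
    """Interior-node hash: SHA-256(0x01 || left || right)."""
    return hashlib.sha256(b"\x01" + left + right).digest()


def _split(n: int) -> int:
    """Largest power of two strictly less than n (requires n >= 2)."""
    k = 1
    while k * 2 < n:
        k *= 2
    return k


def root_hash(entries: Sequence[bytes]) -> bytes:
    """Merkle tree hash of a non-empty list of log entries."""
    if len(entries) == 1:
        return leaf_hash(entries[0])
    k = _split(len(entries))
    return node_hash(root_hash(entries[:k]), root_hash(entries[k:]))


def inclusion_path(index: int, entries: Sequence[bytes]) -> List[bytes]:
    """Sibling hashes for entries[index], ordered from the leaf up to the root."""
    if len(entries) == 1:
        return []
    k = _split(len(entries))
    if index < k:
        return inclusion_path(index, entries[:k]) + [root_hash(entries[k:])]
    return inclusion_path(index - k, entries[k:]) + [root_hash(entries[:k])]


def verify_inclusion(entry: bytes, index: int, tree_size: int,
                     path: Sequence[bytes], root: bytes) -> bool:
    """Recompute the root from the entry and its path (RFC 9162, section 2.1.3.2)."""
    if index >= tree_size:
        return False
    fn, sn = index, tree_size - 1
    r = leaf_hash(entry)
    for p in path:
        if sn == 0:
            return False  # proof is longer than the tree is tall
        if fn & 1 or fn == sn:
            r = node_hash(p, r)  # sibling sits on the left
            # Skip levels where this node has no right sibling.
            while fn != 0 and not fn & 1:
                fn >>= 1
                sn >>= 1
        else:
            r = node_hash(r, p)  # sibling sits on the right
        fn >>= 1
        sn >>= 1
    return sn == 0 and r == root


if __name__ == "__main__":
    releases = [b"release-1", b"release-2", b"release-3"]  # hypothetical entries
    root = root_hash(releases)
    proof = inclusion_path(2, releases)
    print(verify_inclusion(b"release-3", 2, len(releases), proof, root))  # True
    print(verify_inclusion(b"tampered", 2, len(releases), proof, root))   # False
```

Because each interior hash commits to everything beneath it, a client that holds only the published root can reject an entry that was altered after logging or a release for which no valid proof exists.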

Apple has taken this initiative further by expanding its Apple Security Bounty program to cover Private Cloud Compute. Researchers who find vulnerabilities that compromise the system's privacy and security guarantees can earn maximum rewards ranging from $50,000 to $1 million, depending on the category. The bounty categories align with the threats detailed in Apple’s Private Cloud Compute Security Guide, which describes the system’s architecture, its authentication methods, and how its security holds up against various attack scenarios.

To support deeper analysis, Apple has also released the source code for select Private Cloud Compute components under a limited-use license. These include CloudAttestation, which constructs and validates PCC node attestations, and Thimble, which runs on user devices and uses CloudAttestation to enforce verifiable transparency. These resources let researchers analyze the privacy and security of Private Cloud Compute more thoroughly.

Apple designed Private Cloud Compute with end-to-end encryption so that data processed in the cloud remains inaccessible to anyone but the user; not even Apple can view it. This level of privacy was a core promise when Apple announced the system, and the company has now made it easier for independent researchers to verify that claim. Following the announcement of Apple Intelligence, Apple granted select researchers early access to these tools. Now that the resources are public, anyone can help test the security and privacy of Private Cloud Compute.

In a blog post titled “Security research on Private Cloud Compute,” Apple emphasized its commitment to transparency, inviting researchers to use the VRE and the associated tools to perform independent security analyses. Apple describes Private Cloud Compute as the most advanced security architecture ever deployed for cloud AI compute at scale and says it aims to keep improving that security with help from the research community.
